AI NPU software development

Optimizing AI model inference performance and harnessing remarkable hardware efficiency to pave the way toward world-leading capabilities

BOS NPU AI SW Development Verticals

Providing the most versatile SW stack and tools for AI model bring-up and optimization, targeting world-leading model inference performance

- AI Model Compiler Development (Frontend/Backend)

- AI Model Development & Performance Optimization

-

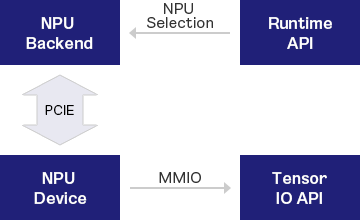

Runtime &

Tensor IO API

Development

-

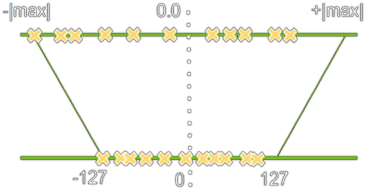

Quantization

Algorithms &

PTQ/QAT Tools

-

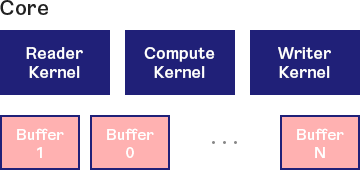

AI Model Ops

Kernel

Development in

C++/Python

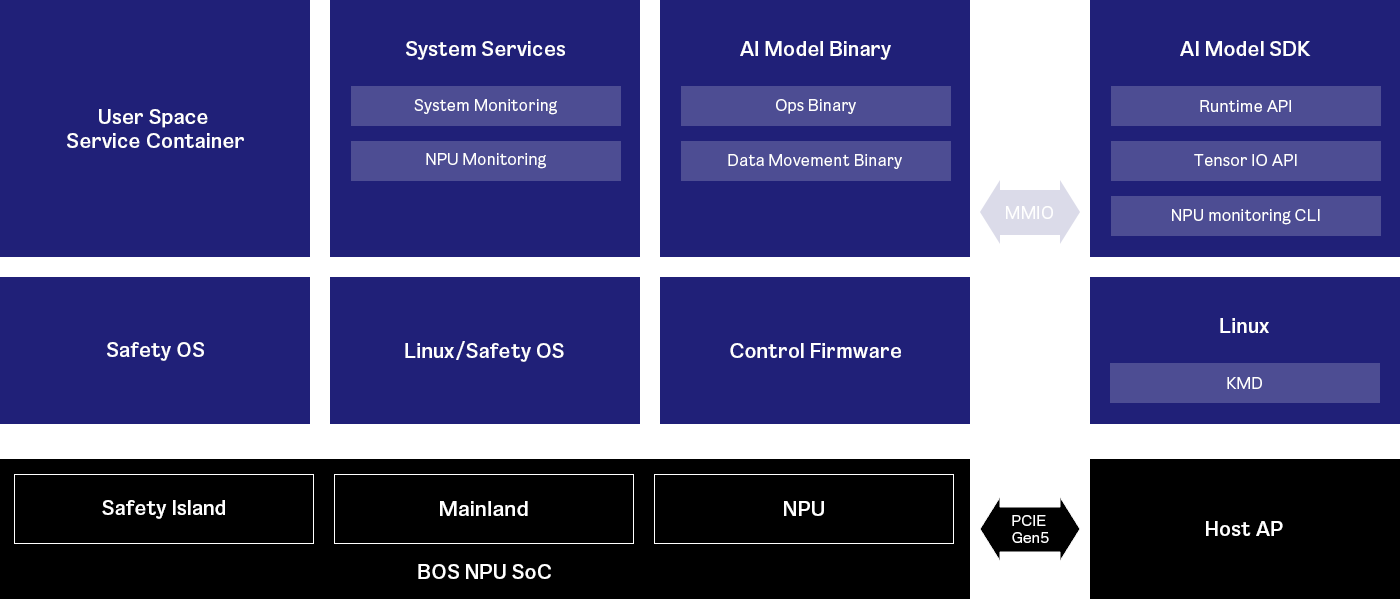

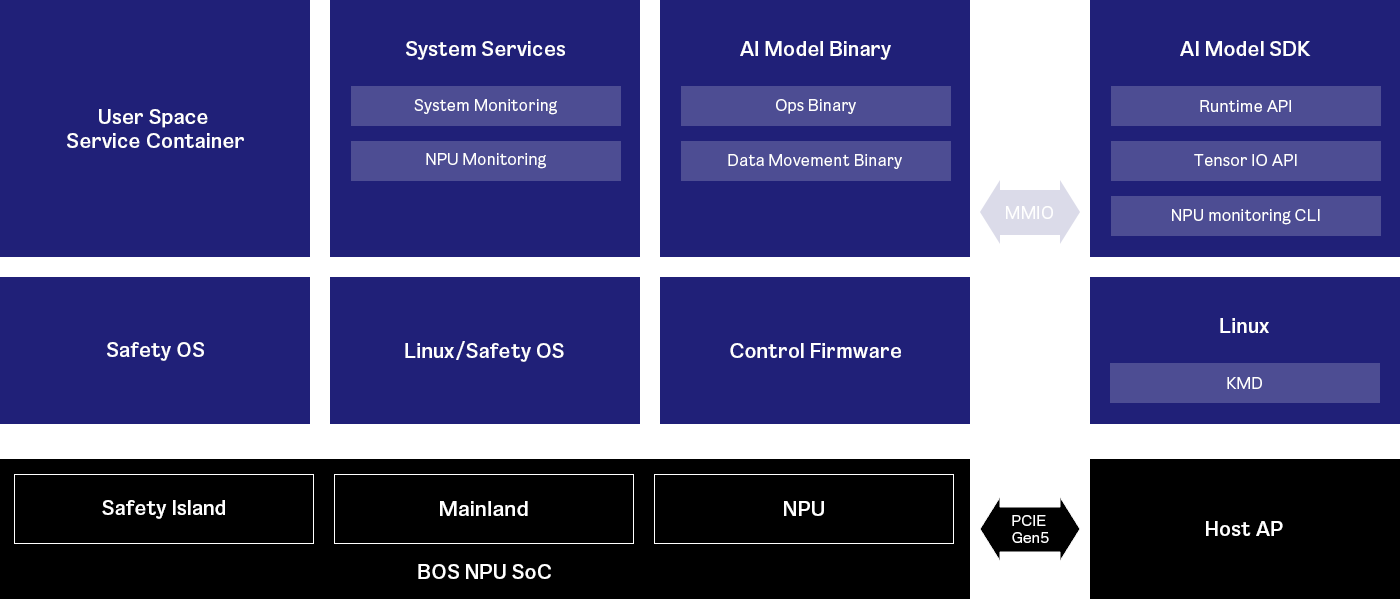

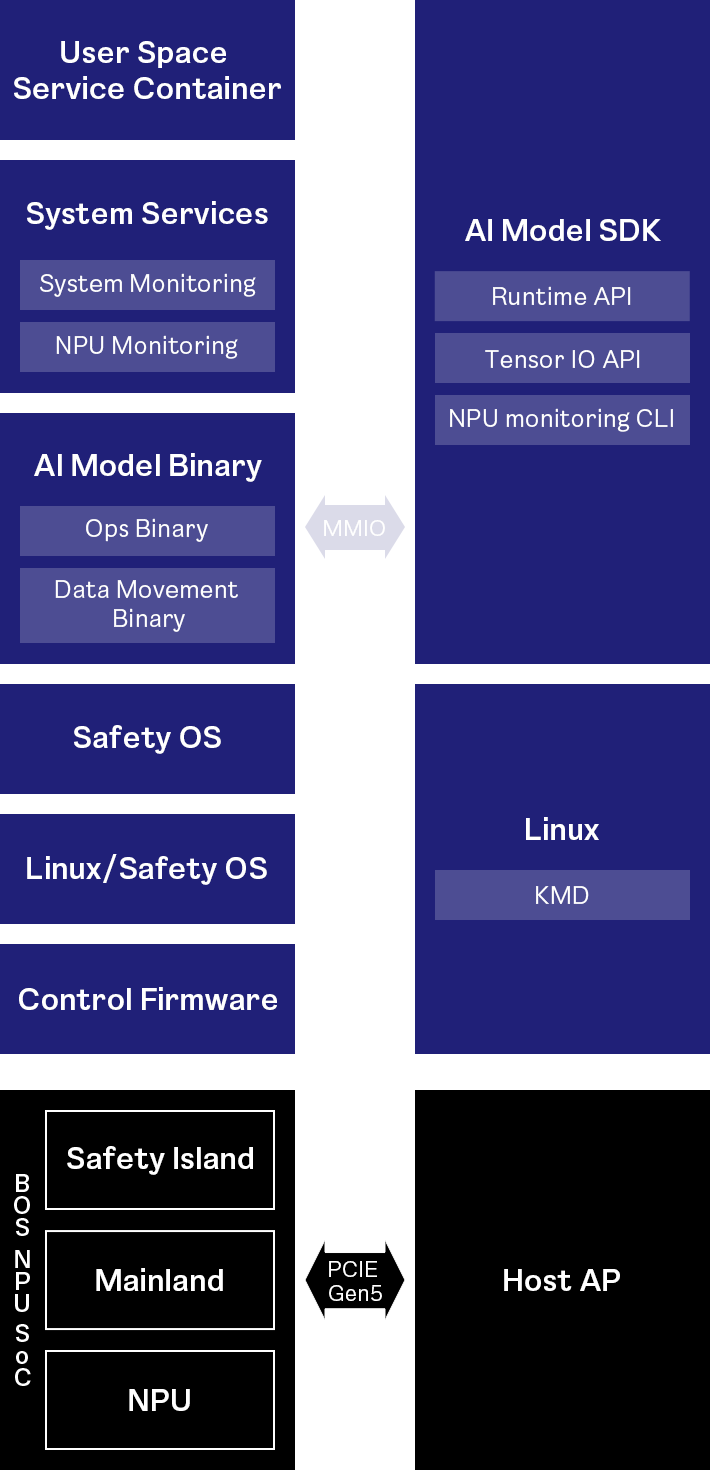

Full NPU SoC SW Stack

We are developing a full-stack SW solution for top AI model inference performance and hardware utilization.

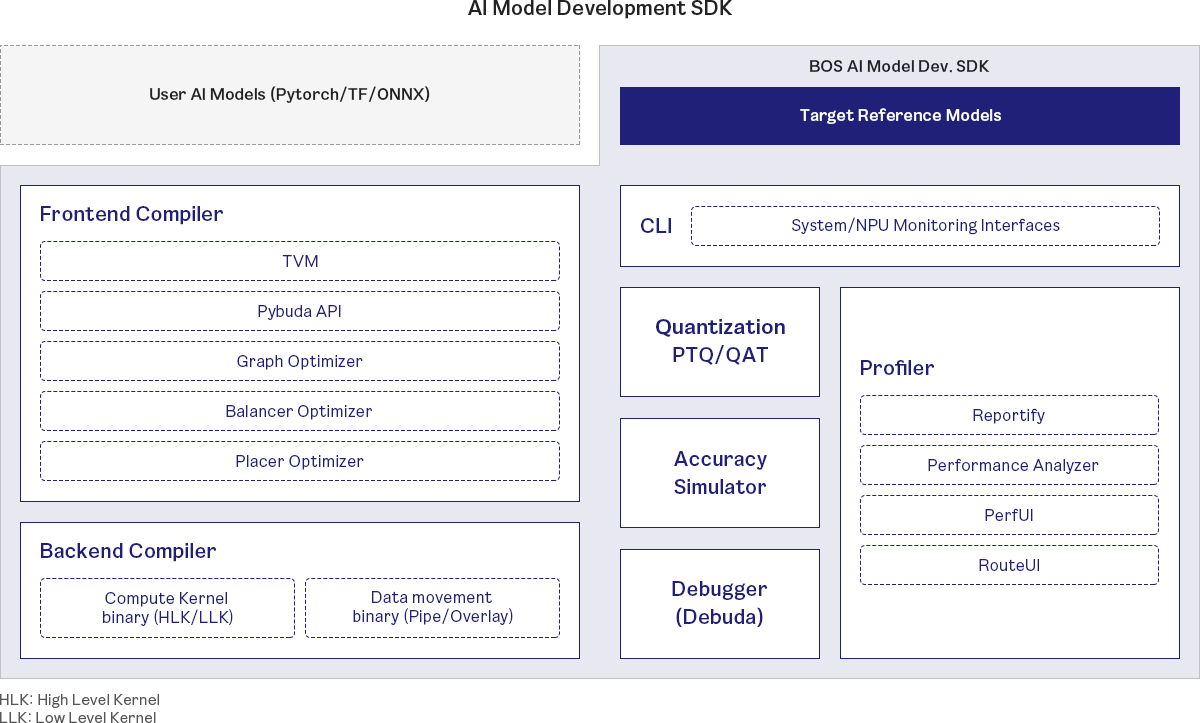

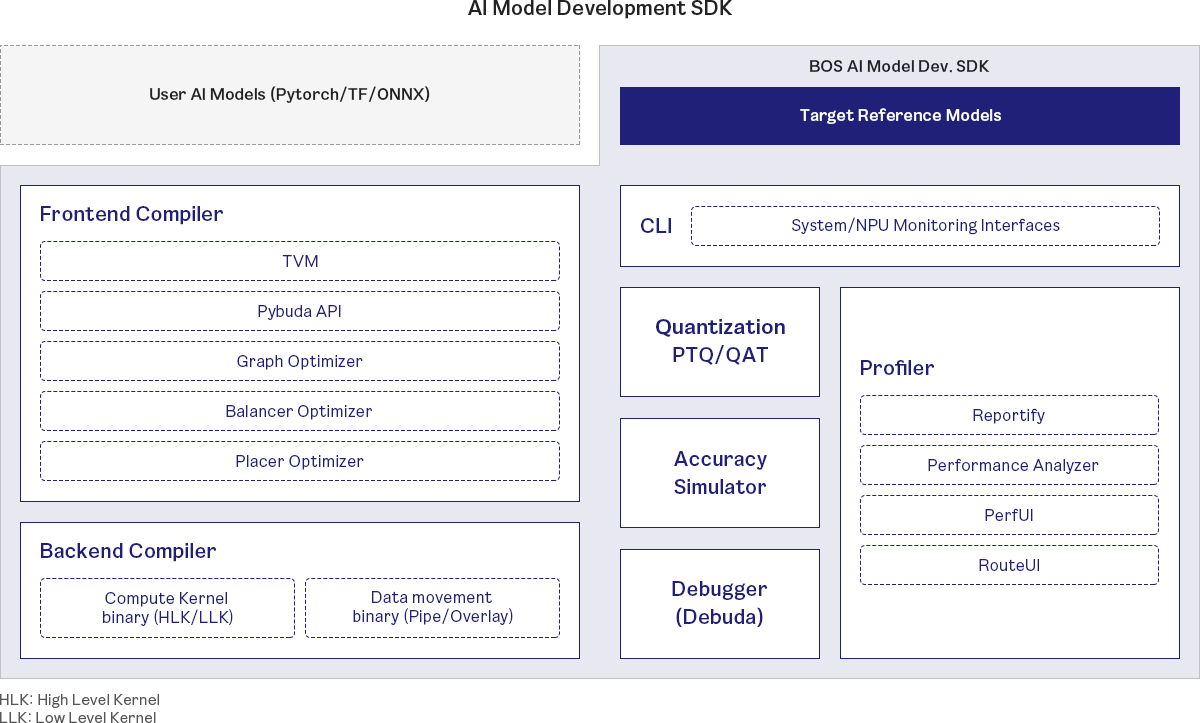

AI Model Development SDK

We are developing an all-around AI model development SDK for the easiest and fastest AI model deployment.

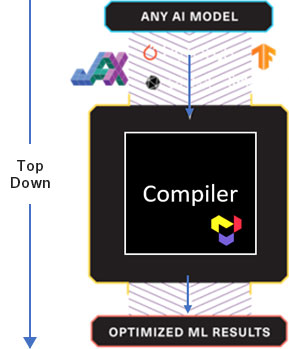

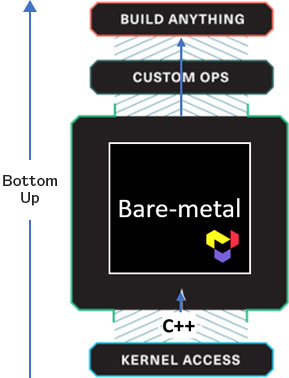

All-around Programming Model

We are developing NPU SW on two distinct NPU SW stacks, one optimized for “Generality” and the other for “Performance”.

- Run many AI models out of the box with little overhead

- Continuous performance improvement

- Support for all major frameworks, including PyTorch and TensorFlow

- Higher performance and higher utilization for targeted AI models

- Completely open hardware that lets you program non-ML applications

- Useful for HPC, C++ environments, and low-level AI model development

Source: Official website of Tenstorrent Inc. BOS and Tenstorrent are collaborating on joint AI SW development.

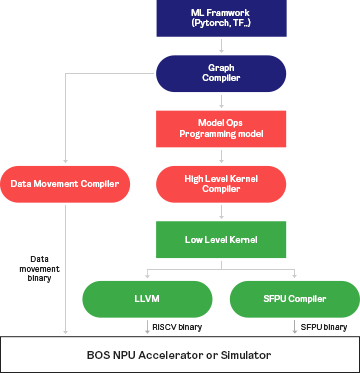

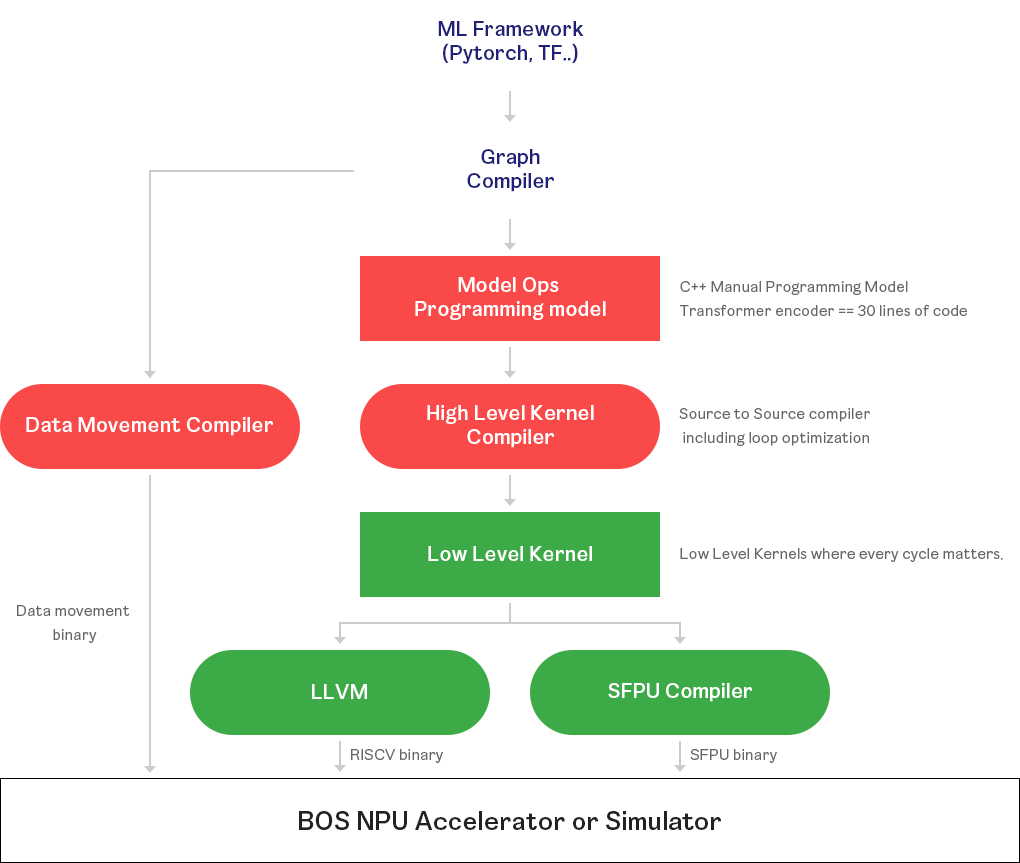

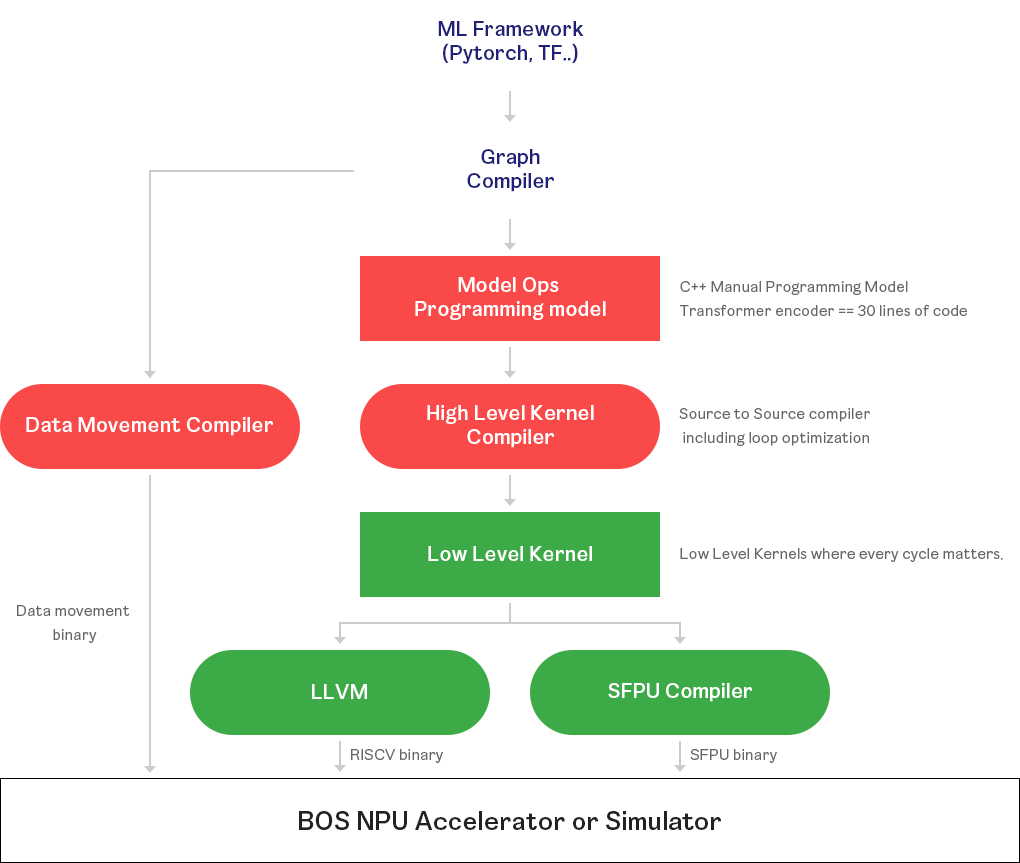

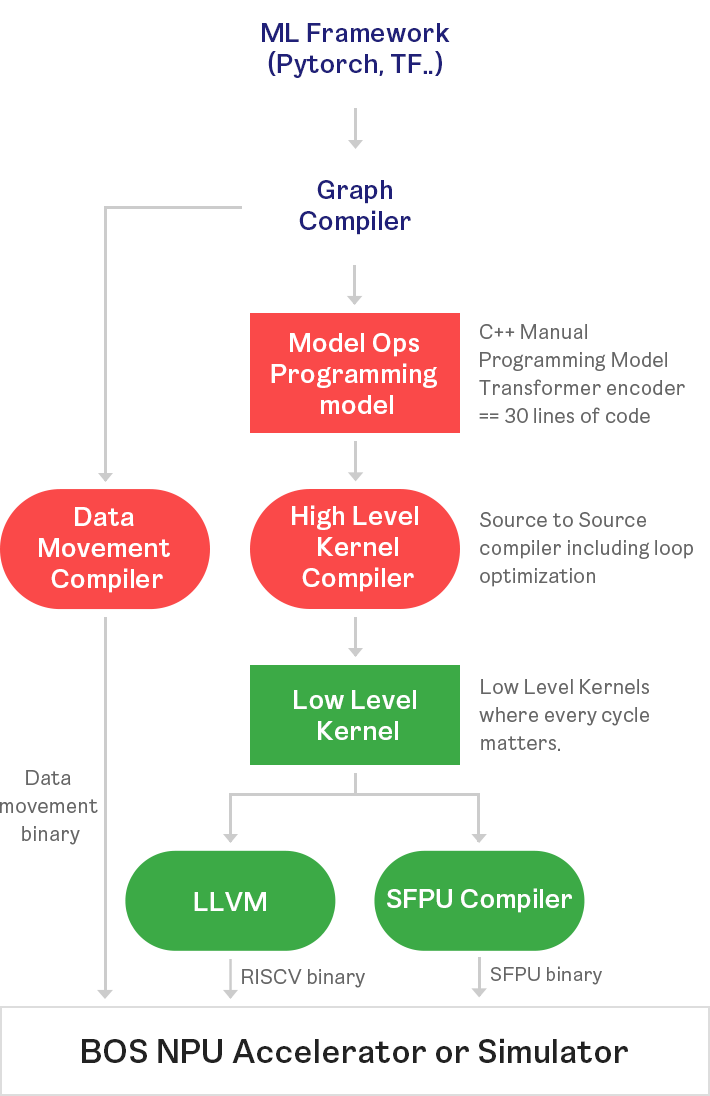

Pre-trained AI Model Compilation Flow

We are developing the most flexible and compatible frontend and backend AI model compiler for the world’s best AI model inference performance.

Source: Official website of Tenstorrent Inc. BOS and Tenstorrent are collaborating on joint AI SW development.